Marcel and Forecasting Systems

I’ve been spending some time recently thinking about forecasting systems. I was thinking about coming up with one of my own, only to find out that the CAIRO forecasts did essentially everything I had in mind. There’s a plethora of systems out there; off the top of my head, I can think of about nine. There are CHONE (which looks like it’s done for, now that Sean Smith works for a Major League organization), PECOTA, ZiPS, CAIRO, Oliver, and Bill James. There are a few others that don’t seem to get as much play: one is Ron Shandler’s, and I think it’s subscriber-only. Mitchel Lichtman, one of the brighter saberists out there (and the creator of UZR), has his own, and a man by the name of John Eric Hanson has done exceptionally well in forecasting challenges in recent years. One forecasting system, though, typically sits in the middle of the pack, and it uses surprisingly little information to arrive at its predictions.

If you’re familiar with sabermetrics, you’ve undoubtedly heard of the Marcel forecasts, which were developed by Tom Tango. Tango describes Marcel as “(…) The minimum level of competence that you should expect from any forecaster.” It is meant to serve as a reference point for other forecasting systems: if you’re doing worse than Marcel, you need to tweak your methodology, and if you’re only doing as well as Marcel, you’ve still got some work ahead of you. It works surprisingly well for a system that does so little, and since I’ve been thinking about the topic so much, I thought I’d talk a little bit about how Marcel works.

I should note that I’ve only focused on hitters; I haven’t looked at how Marcel handles pitchers. Also, when I recreate the forecasts, I come out with slightly different figures than Tango’s published ones. I’m not certain why, but the differences are small enough that I’m not concerned.

Weighting the Data

All forecasting systems weight multiple years of data whenever possible. Logically, this makes sense: by placing more emphasis on the player’s most recent seasons, you get a better look at his current performance whilst still accounting for possible random variation in the most recent year.

Let’s think of it this way. Suppose a player had a home run rate (home runs per plate appearance) of .066 in his most recent season, .025 the year before and .054 the year before that. His weighted rate (using Marcel’s standard 5/4/3 weights) would be .049. Scaled to 650 PA, his oldest season was well above average (35 home runs), the middle one well below average (16) and the most recent one outstanding (43): two seasons of outstanding power on either side of one poor one. Over 636 plate appearances, his weighted rate translates into 31 home runs. The player is Carlos Beltrán heading into 2007, and he actually hit 33 that season. Of course, weighted rates don’t always work this well, but they certainly lend more insight than a straight average would.

If you’re not sure how a weighted average works: multiply each component by its corresponding weight and add up the products to get the weighted sum, then divide by the sum of the weights. That might sound a bit confusing, so I’ll walk you through the Beltrán example:

The home run rates as listed above were .066, .025 and .054. The weighted sum would then be (.066*5) + (.025*4) + (.054*3) = .592. The sum of the weights is simply 5+4+3 = 12. So, our weighted average is .592 / 12 = .049.
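The same arithmetic as a minimal Python sketch. The function name `weighted_rate` is hypothetical, rates are assumed to be listed most recent season first, and a player with fewer than three seasons simply uses the leading weights.

```python
def weighted_rate(rates, weights=(5, 4, 3)):
    """Weighted average of per-season rates, most recent season first.

    Each rate is multiplied by its weight, the products are summed, and
    the total is divided by the sum of the weights actually used.
    """
    used = weights[: len(rates)]  # fewer seasons: use only the leading weights
    weighted_sum = sum(r * w for r, w in zip(rates, used))
    return weighted_sum / sum(used)


# Beltrán's HR/PA rates, most recent season first
rate = weighted_rate([0.066, 0.025, 0.054])
print(round(rate, 3))      # 0.049
print(round(rate * 636))   # 31
```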

Different forecasting systems use different weights—this is just how Marcel handles it. Ideally, you use best-fit weights, which Oliver does.

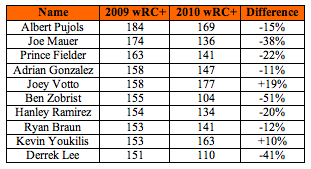

Regression to the Mean

In the table above, you’ll see the top ten hitters in the Major Leagues in 2009, ranked in descending order by wRC+. We’re talking about some insanely good seasons: every player was at least 50% better than the league average, and the best, Albert Pujols, was nearly twice as good as the league average. And the following year? Only two of the ten players improved; everyone else regressed, by at least 11%. This is why forecasters regress to the mean (the “mean” being the average of whatever population they choose). We cannot assume that a player will perform just as well as he did in his most recent season, and since we want to strip out luck and random variation, we must regress to the mean in order to estimate the player’s true talent level.

But how much should we regress, and toward which population?

Forecasting systems such as CAIRO regress to the positional mean, and I believe PECOTA regresses toward the player’s most similar historical comparables. Ideally, you want to regress to the most specific mean you can, so the “perfect” population would be players who play the same position, are physically similar, match in age and have a similar toolset, among other variables. Marcel regresses to the mean of all non-pitchers.

How much we regress depends on two things. The first is how much playing time the player we’re forecasting has: the more time he’s spent in the Majors, the more we know about him and the less we need to regress. Conversely, the less he’s played, the less we know about him and the more we need to regress to estimate his true talent. The second is the component itself. Certain components stabilize faster than others and do not need to be regressed as heavily. (By “stabilize,” I mean the component reaches an “r” of .5 to .7, whether through year-to-year [YTY] correlations or through intraclass correlation [ICC].) Russell Carleton’s work with ICC has been cited often; his findings suggest that a player’s home run rate stabilizes at around 300 PA, strikeouts at around 150, walks at around 200, and so on.

Marcel, being the simple creature that it is, regresses all components the same amount. It defines a player’s reliability as his weighted plate appearances divided by the sum of his weighted plate appearances and 1200.

David Wright, for example, had 670 PA in 2010, 618 in 2009 and 735 in 2008. Multiply these by the appropriate weights (670*5 for 2010, 618*4 for 2009 and 735*3 for 2008) and you get 8,027 weighted PA. The player’s individual reliability score is calculated as:

r = PA / (PA + 1200)

So the reliability for Wright would be 8027 / (8027 + 1200) = .87, which means we regress his weighted rates by (1 – .87) = 13%. What about a player like Jason Heyward, who has only amassed 623 PA in the Majors, all in one season? His weighted PA are 623*5 = 3,115, so his reliability score would be 3115 / (3115 + 1200) = .72, meaning we regress his rates by 28%. I’m not sure exactly where Tango got the 1200 figure, but this is the regression-to-the-mean component used in Marcel.
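As a quick check on the arithmetic, here is a minimal Python sketch of Marcel’s reliability score as described above. The function name `reliability` and its parameters are hypothetical; plate appearances are listed most recent season first, and missing seasons are simply left off.

```python
def reliability(pa_by_season, weights=(5, 4, 3), constant=1200):
    """Reliability = weighted PA / (weighted PA + 1200).

    pa_by_season lists plate appearances, most recent season first.
    """
    weighted_pa = sum(pa * w for pa, w in zip(pa_by_season, weights))
    return weighted_pa / (weighted_pa + constant)


wright = reliability([670, 618, 735])   # weighted PA = 8,027
heyward = reliability([623])            # weighted PA = 3,115
print(f"Wright:  r = {wright:.2f}, regress {1 - wright:.0%}")    # r = 0.87, regress 13%
print(f"Heyward: r = {heyward:.2f}, regress {1 - heyward:.0%}")  # r = 0.72, regress 28%
```

A player with no Major League plate appearances gets a reliability of zero, so his forecast is pure population average.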

We can figure the regressed rate in two ways: one assumes we don’t know the player’s reliability (which is most often the case), and the other builds it into the equation. For the first, if we know the reliability of a component and the average plate appearances of the population that reliability was measured in, we can generate a constant that lets us regress any player based on his plate appearances. Let’s say we have an r of .7 for walk rate in a population in which the average player has 300 plate appearances. Our constant is figured as:

Constant = (1 – r) * Average Opps / r

In this case, our constant would be .3*300 / .7 = 129. If a player has 600 PA and a walk rate of 12% where the average is 9%, we regress his rate through the following formula:

Regressed rate = (Rate*PA + League Rate*Constant) / (PA + Constant)

For our example, the player’s regressed rate would be (.12*600 + .09*129) / (600 + 129) = .115. A player with 150 PA and the same walk rate would be regressed to .106. For Marcel, “PA” is the weighted sum of the plate appearances and “Constant” is 1200.
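A minimal Python sketch of the two formulas above, reproducing the walk-rate example. The function names `regression_constant` and `regress` are hypothetical. For Marcel, you would pass weighted PA as `pa` and 1200 as `constant`.

```python
def regression_constant(r, avg_opps):
    """Constant = (1 - r) * Average Opps / r."""
    return (1 - r) * avg_opps / r


def regress(rate, pa, league_rate, constant):
    """Regressed rate = (Rate*PA + League Rate*Constant) / (PA + Constant)."""
    return (rate * pa + league_rate * constant) / (pa + constant)


c = regression_constant(0.7, 300)             # about 128.6 (129 in the text)
print(round(regress(0.12, 600, 0.09, c), 3))  # 0.115
print(round(regress(0.12, 150, 0.09, c), 3))  # 0.106
```

Note how the constant behaves like a block of league-average plate appearances added to the player’s own: the fewer PA he has, the more that block dominates.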

Back to our example of Wright: let’s regress his home run rate using this method. He has a weighted home run rate of .035 and 8,027 weighted plate appearances, in a league with a home run rate of .027. Plugging those numbers into the equation above gives (.035*8027 + .027*1200) / (8027 + 1200), which comes out to .034. Over 670 plate appearances (roughly his weighted average of PA), this translates into 23 home runs.

If we know the player’s reliability, finding the regressed rate is a bit easier. It is simply:

Regressed rate = (Player r * Player Rate) + ((1 – Player r) * League Rate)

In Wright’s case, this also gives us (.87*.035) + (.13*.027) = .034. Think of regression to the mean as a blend of the player and the population: the more we know about a player, the more we weight his own performance; the less we know, the more we weight the population’s.
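The two methods are the same calculation: substituting r = PA / (PA + Constant) into the reliability formula gives back the constant formula. A minimal Python sketch (function names hypothetical) checks this with Wright’s numbers from the text.

```python
def regress_with_constant(rate, weighted_pa, league_rate, constant=1200):
    """(Rate*PA + League Rate*Constant) / (PA + Constant)."""
    return (rate * weighted_pa + league_rate * constant) / (weighted_pa + constant)


def regress_with_reliability(rate, r, league_rate):
    """(Player r * Player Rate) + ((1 - Player r) * League Rate)."""
    return r * rate + (1 - r) * league_rate


# David Wright: weighted PA and reliability as computed above
weighted_pa = 670 * 5 + 618 * 4 + 735 * 3   # 8,027
r = weighted_pa / (weighted_pa + 1200)       # about .87

by_constant = regress_with_constant(0.035, weighted_pa, 0.027)
by_reliability = regress_with_reliability(0.035, r, 0.027)
print(round(by_constant, 3), round(by_reliability, 3))  # 0.034 0.034
print(round(by_constant * 670))                          # 23
```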

Aging

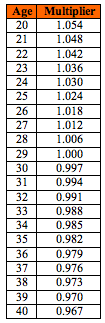

Another thing that forecasting systems take in to account are age adjustments. As a player gets older, he either sees improvement or he declines. Generally speaking, players see an improvement in their performance until about 27-28 years of age and see a steady decline afterwards—and accounting for this helps aid forecasters in their estimate of the player’s true talent. Marcel incorporates a simple blanket aging adjustment, which you’ll see below:

According to Marcel, players peak at the age of 29.

Edit: Tango clarifies:

One point about the “age 29” thing: note that the player is 29 years old, and we are looking at his stats at age 26, 27, 28. So, when I say I don’t apply an aging factor at age 29, it’s because his talent level at age 29 is right in the middle of his talent level at age 26-28 (more or less).

And just as with regression, each component has its own aging pattern. According to Mitchel Lichtman’s studies, a player’s overall performance (based on linear weights) peaks at 27, but home runs plateau from 28 to 29, walks improve until the age of 34, and strikeouts increase after 29. So, unsurprisingly, other forecasting systems build component-specific aging patterns into their design. In Wright’s case, the age adjustment leaves his home run rate virtually untouched (a factor of 1.006): his Marcel home run rate is .034*1.006 = .0342, still about .034.
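The text doesn’t spell out Marcel’s age curve (it lives in the chart), so this Python sketch assumes the commonly cited version: +0.6% per year younger than 29 and −0.3% per year older. That assumption reproduces the 1.006 factor quoted for Wright at age 28. The name `age_factor` is hypothetical, and the factor is meant for a rate where higher is better, such as HR/PA.

```python
PEAK_AGE = 29


def age_factor(age):
    """Multiplier applied to a 'good' rate such as HR/PA.

    ASSUMPTION: +0.6% per year under 29, -0.3% per year over 29.
    """
    if age < PEAK_AGE:
        return 1 + (PEAK_AGE - age) * 0.006
    return 1 + (PEAK_AGE - age) * 0.003   # 1.0 at 29, below 1.0 after


print(round(age_factor(28), 3))           # 1.006
print(round(0.034 * age_factor(28), 4))   # 0.0342
```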

See how simple Marcel really is? All he does is weight three years of data, apply a blanket regression (which is easy to derive) and apply a blanket age adjustment. And he still manages to hold his own with the big boys.
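Strung together, the three steps fit in one short function. This is a sketch under stated assumptions, not Tango’s implementation: the name `marcel_rate` is hypothetical, the age step uses the commonly cited +0.6%/−0.3% rule rather than anything given in the text, and the weighting step weights raw counts and plate appearances separately. Wright’s home run totals (29 in 2010, 10 in 2009, 33 in 2008) are not listed above; they are supplied here as inputs and reproduce the .035 weighted rate.

```python
WEIGHTS = (5, 4, 3)    # most recent season first
REGRESSION_PA = 1200   # PA of league-average performance blended in
PEAK_AGE = 29


def marcel_rate(seasons, league_rate, age):
    """Sketch of the three steps for one component (e.g. HR/PA).

    seasons: list of (events, pa) tuples, most recent season first.
    """
    # 1. Weight: 5/4/3-weighted event counts and plate appearances
    w_events = sum(w * events for w, (events, _) in zip(WEIGHTS, seasons))
    w_pa = sum(w * pa for w, (_, pa) in zip(WEIGHTS, seasons))

    # 2. Regress: blend with 1200 PA at the league rate
    rate = (w_events + league_rate * REGRESSION_PA) / (w_pa + REGRESSION_PA)

    # 3. Age: ASSUMED +0.6% per year under 29, -0.3% per year over
    if age < PEAK_AGE:
        rate *= 1 + (PEAK_AGE - age) * 0.006
    else:
        rate *= 1 + (PEAK_AGE - age) * 0.003
    return rate


# David Wright entering 2011: (HR, PA) for 2010, 2009, 2008
wright = marcel_rate([(29, 670), (10, 618), (33, 735)], league_rate=0.027, age=28)
print(round(wright, 4))      # 0.0345
print(round(wright * 670))   # 23
```

With an empty `seasons` list, step 2 returns exactly the league rate, which is how a player with no Major League data ends up as a league-average hitter before the age step.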

One thing to note is that Marcel pays absolutely no attention to Minor League performance: if a player has no Major League playing time, Marcel treats him as a league-average hitter, nothing more and nothing less. This is where the other forecasting systems differ from Marcel.

I’m sure there are other factors in projection systems that I’ve overlooked, but hopefully my understanding and explanation of them is a reasonable one. There are really three main steps that I’m aware of: weighting the data, regressing it, and adjusting for age. The systems differ in how they carry out those steps and in how they treat minor league performance.

Excellent article. We have the fantasy relevant CAIRO projections on our website as well as our own projections. If you ever decide to do your own projections, please send me an email and I’ll include you on our site (www.rotochamp.com).

I should note that our pitching projections aren’t quite done, but the hitting projections are about 90% complete. Since you are a diehard SF fan, let me know how the SF hitters look from a lineup and projected AB standpoint.

The pitching projections should be done in the next 10 days or so.

Thanks – Mike

Thanks for stopping by!

Playing time looks about right to me. I think that Pablo has a good chance of accumulating ~560 AB again, although this could change if the Giants sour on him.

In terms of the lineup, I would expect to see something along these lines:

1. Torres

2. Sanchez

3. Posey

4. Huff

5. Burrell

6. Sandoval

7. Tejada

8. Ross

9. Pitcher

I imagine Bruce Bochy is going to pay attention to how the guys are looking in the spring, too, so we might get a better idea around mid-March.

Thanks for your input. I’ve updated the Giants batting order and adjusted Sandoval’s ABs. Also, we’ve put our pitching projections up on the SF team page. Here’s the link:

http://www.rotochamp.com/players/TeamPage.aspx?Team=SF